Bit depth quantifies how many unique colors are available in an image's color palette in terms of the number of 0's and 1's, or "bits," used to specify each color. This does not mean that the image necessarily uses all of these colors, only that it can specify colors with that level of precision. For a grayscale image, the bit depth quantifies how many unique shades are available. Images with higher bit depths can encode more shades or colors because there are more combinations of 0's and 1's available.

Every color pixel in a digital image is created through some combination of the three primary colors: red, green, and blue.

Each primary color is often referred to as a "color channel," and the range of intensity values it can take is determined by its bit depth.

The bit depth of each primary color is termed the "bits per channel." The "bits per pixel" (bpp) is the sum of the bits in all three color channels and determines the total number of colors available at each pixel.

Confusion frequently arises with color images because it may be unclear whether a quoted number refers to bits per pixel or bits per channel. Using "bpp" as a suffix helps distinguish the two.

Most color images from digital cameras have at least 8 bits per channel, so each channel value is specified by a sequence of eight 0's and 1's. This allows for $2^8$, or 256, different combinations, which translates into 256 different intensity values for each primary color. When all three primary colors are combined at each pixel, this allows for as many as $2^{8 \times 3}$, or 16,777,216, different colors, often called "true color." This is referred to as 24 bits per pixel, since each pixel is composed of three 8-bit color channels. The number of colors available for any $X$-bit image is simply $2^X$ if $X$ refers to the bits per pixel, and $2^{3X}$ if $X$ refers to the bits per channel.
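The two formulas above can be checked with a short calculation. This is a minimal Python sketch; the function names are hypothetical, and it assumes three color channels of equal bit depth.

```python
def colors_from_bits_per_pixel(bpp: int) -> int:
    """Total colors when X is the bits per pixel: 2**X."""
    return 2 ** bpp


def colors_from_bits_per_channel(bpc: int, channels: int = 3) -> int:
    """Total colors when X is the bits per channel: 2**(channels * X)."""
    return 2 ** (channels * bpc)


if __name__ == "__main__":
    # 8 bits per channel and 24 bits per pixel describe the same image.
    print(colors_from_bits_per_channel(8))   # 16777216
    print(colors_from_bits_per_pixel(24))    # 16777216
    # A 16-bit-per-channel (48 bpp) image.
    print(colors_from_bits_per_channel(16))  # 281474976710656
```

Keeping the two functions separate mirrors the bpp versus bits-per-channel distinction: the same number X gives very different totals depending on which one it refers to.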

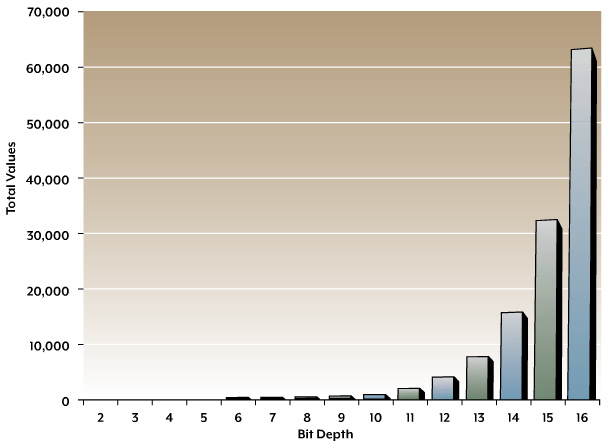

The following table illustrates different image types in terms of bits (bit depth) and total color values available.

A single bit can store two values (ostensibly zero and one, but for our purposes it is more useful to think of these as black and white), whereas 2 bits can store four possible values (black, white, and two shades of gray), and so on, with each additional bit doubling the number of values. Digital image files are typically stored using either 8 or 16 bits for each of the three color (red, green, blue) channels that define pixel values, while HDR (high dynamic range) images are processed and stored as 32-bit-per-channel images.
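To make the "and so on" concrete, the sketch below lists the evenly spaced gray levels that an n-bit grayscale value can represent, scaled to a 0 to 255 display range purely for readability. The function name and the even spacing between black and white are illustrative assumptions, not a description of any particular file format.

```python
def gray_levels(bits: int, display_max: int = 255) -> list[int]:
    """Return the 2**bits evenly spaced gray levels from black to white.

    Level 0 is black and the last level is white; values are scaled to
    0..display_max and rounded to integers for display.
    """
    count = 2 ** bits
    step = display_max / (count - 1)
    return [round(i * step) for i in range(count)]


if __name__ == "__main__":
    print(gray_levels(1))       # [0, 255]: black and white
    print(gray_levels(2))       # [0, 85, 170, 255]: black, two grays, white
    print(len(gray_levels(8)))  # 256
```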

8 Bit vs. 16 Bit

The difference between an 8-bit and a 16-bit image file is the number of tonal values that can be recorded. (Anything over 8 bits per channel is generally referred to as high bit.) An 8-bit-per-channel capture contains up to 256 tonal values for each of the three color channels: each bit can store one of two possible values, and there are 8 bits, giving two raised to the power of eight, or 256, possible tonal values. A 16-bit image can store up to 65,536 tonal values per channel, or two raised to the power of 16.

The analog-to-digital conversion that takes place within a digital camera may use 8 bits (256 tonal values per channel), 12 bits (4,096 tonal values per channel), 14 bits (16,384 tonal values per channel), or 16 bits (65,536 tonal values per channel), with most cameras using 12 or 14 bits. When working with a single exposure, imaging software generally supports only 8-bit and 16-bit-per-channel modes, so anything over 8 bits per channel is stored as a 16-bit-per-channel image, even if the image doesn’t actually contain that level of information.
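The sketch below tabulates the tonal values for the converter bit depths listed above and shows why 12-bit data placed in a 16-bit-per-channel file still carries only 4,096 distinct values per channel. Padding by a left bit shift (the hypothetical `to_16bit_container` helper) is an assumption chosen for illustration; raw converters and editors may instead rescale values to the full 16-bit range, which changes the codes but not how many distinct ones there are.

```python
ADC_BIT_DEPTHS = (8, 12, 14, 16)


def tonal_values(bits: int) -> int:
    """Number of tonal values per channel for a given bit depth."""
    return 2 ** bits


def to_16bit_container(code: int, source_bits: int) -> int:
    """Place a source_bits-wide value in a 16-bit container.

    Assumption: the value is left-shifted so its maximum lands near the top
    of the 16-bit range. No new tonal information is created.
    """
    return code << (16 - source_bits)


if __name__ == "__main__":
    for bits in ADC_BIT_DEPTHS:
        print(f"{bits:>2} bits: {tonal_values(bits):,} tonal values per channel")

    # Every possible 12-bit code, stored as 16-bit values.
    stored = {to_16bit_container(c, 12) for c in range(tonal_values(12))}
    print(len(stored), "distinct values out of", tonal_values(16), "possible")
```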

When you start with a high-bit image by capturing the image in the RAW file format, you have more tonal information available when making your adjustments. Even if your adjustments—such as increases in contrast or other changes—cause a loss of certain tonal values, the huge number of available values means you’ll almost certainly end up with many more tonal values per channel than if you started with an 8-bit file. That means that even with relatively large adjustments in a high-bit file, you can still end up with perfectly smooth gradations in the final output.
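A rough way to see this effect is to apply the same strong contrast adjustment to every possible value of an 8-bit and a 16-bit channel, convert the results to 8 bits for output, and count how many distinct output levels survive. The linear stretch between 25% and 75% input brightness is a hypothetical adjustment picked for illustration, and the function names are likewise hypothetical; real curves differ in detail, but the missing levels (gaps in the histogram) in the 8-bit case are the point.

```python
def stretch_contrast(code: int, max_code: int,
                     low: float = 0.25, high: float = 0.75) -> int:
    """Linearly map the input range [low, high] to [0, 1], clipping outside
    it, and re-quantize to the same bit depth."""
    x = code / max_code
    y = min(max((x - low) / (high - low), 0.0), 1.0)
    return int(y * max_code + 0.5)


def surviving_8bit_levels(bits: int) -> int:
    """Count distinct 8-bit output levels after the adjustment."""
    max_code = 2 ** bits - 1
    levels = set()
    for code in range(max_code + 1):
        adjusted = stretch_contrast(code, max_code)
        # Convert the adjusted value to 8 bits for final output.
        levels.add(int(adjusted / max_code * 255 + 0.5))
    return len(levels)


if __name__ == "__main__":
    print("8-bit source: ", surviving_8bit_levels(8), "of 256 levels")
    print("16-bit source:", surviving_8bit_levels(16), "of 256 levels")
```

With the 8-bit source, roughly half of the 256 output levels go unused, which shows up as banding in smooth gradients; the 16-bit source still fills every 8-bit output level after the same adjustment.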

Working in 16-bit-per-channel mode offers a number of advantages, not the least of which is helping to ensure smooth gradations of tone and color within the image, even when strong adjustments are applied. Because a 16-bit-per-channel image uses twice as many bits per channel as an 8-bit-per-channel image, its uncompressed file size is double. However, since image quality is our primary concern, we feel the advantages of a high-bit workflow far exceed the (relatively low) extra storage costs and other drawbacks, and thus recommend always working in 16-bit-per-channel mode.
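The doubling can be estimated with simple arithmetic on uncompressed pixel data. The 6000 x 4000 pixel dimensions below are a hypothetical example, and the estimate ignores compression, metadata, and embedded previews, so real files on disk will differ.

```python
def uncompressed_bytes(width: int, height: int, bits_per_channel: int,
                       channels: int = 3) -> int:
    """Size of raw pixel data in bytes: pixels x channels x bits / 8."""
    return width * height * channels * bits_per_channel // 8


if __name__ == "__main__":
    width, height = 6000, 4000  # hypothetical 24-megapixel image
    for bpc in (8, 16):
        size = uncompressed_bytes(width, height, bpc)
        print(f"{bpc:>2} bits per channel: {size / 1e6:.0f} MB")
```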