Intelligent

Information

Retrieval

CSC 575

Assignment 4

Due:

Thursday, March 13

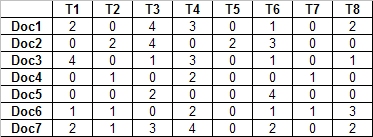

- Consider the following document-term matrix containing raw term frequencies

(See Excel Spreadsheet).

- Construct a term-term distance matrix using Euclidean distance as

the measure.

- Determine the binary term relationship matrix using a distance threshold of

4.00,

and then construct the graph corresponding to this matrix. Note: there is an edge

between each pair of nodes whose Euclidean distance is less than or equal to

4.00.

- Using the above graph, perform clustering based on the Clique algorithm.

- Using the above graph, perform clustering based on the Single Link algorithm.

- Consider again the same document-term matrix for the previous problem. In

this problem we focus on clustering documents.

- Starting with documents 1 and 2 in Cluster 1, and with documents 5,

6 and 7

in Cluster 2, perform K-means clustering of the documents using K = 2 (show the

reallocation of the documents to clusters

after each iteration). Use the cosine similarity measure in the clustering

algorithm.

- Perform clustering of documents using the one-pass (single-pass)

technique (show your work). Use dot product as your similarity measure,

and use a pre-specified similarity threshold of 10. Allow for overlapping

clusters if an item satisfies the similarity threshold for more than one

cluster. The algorithm for

this techniques was given lecture notes on

clustering. For an example of how this

technique is applied see single-pass.html.

- Read the paper Hyperlink Analysis for the Web by

Monika Henzinger, Research Director at Google, and answer the following questions

(in each case, briefly discuss your answers or provide short explanations).

- What are the basic assumptions behind the hyperlink analysis algorithms?

- What is meant by connectivity-based ranking, and how does it relate to co-citation analysis?

- What are some of the primary applications of hyperlink analysis in Web information retrieval?

- Briefly describe the PageRank algorithm used by the Google search engine.

- Briefly describe the HITS algorithms. What are some of the problems associated with

this algorithm, and how might one resolve these problems?

- How can one spam hyperlink-based search engines? Are they subject to spamming

attacks as keyword based approaches? If so, can you think of some approaches to reduce

or counter such attacks?

- Hyperlink Analysis.

- Consider the following Web pages and their linkage

structure:

Page A has links to pages B and C

Page B has a link to Page C

Page C has a link to Page A

Run the PageRank algorithm on this subgraph of pages (see slides 50 and 51

of Lecture 8 for the algorithm and an

example). Assume that

a = 0.15. Simulate the algorithm for

three iterations and show PageRank scores twice for each iteration, both before

and after normalization.

- Consider the following Web pages and their linkage structure:

Page A has links to pages C, D, and E

Page B has a link to Page D and E

Run the HITS (Hubs and Authorities) algorithm on this subgraph of pages (see

slides 34 and 35 of Lecture 8 for the

algorithm and an example).

Simulate the algorithm for three iterations and show the hub and authority

scores (represented as two vectors over A, B, C, D, E) twice for each iteration,

both before and after normalization.

Back to Assignments

|